Building with reasoning models: infrastructure requirements and verification loops

Explore how reasoning models are transforming AI with structured verification loops and deep research capabilities.

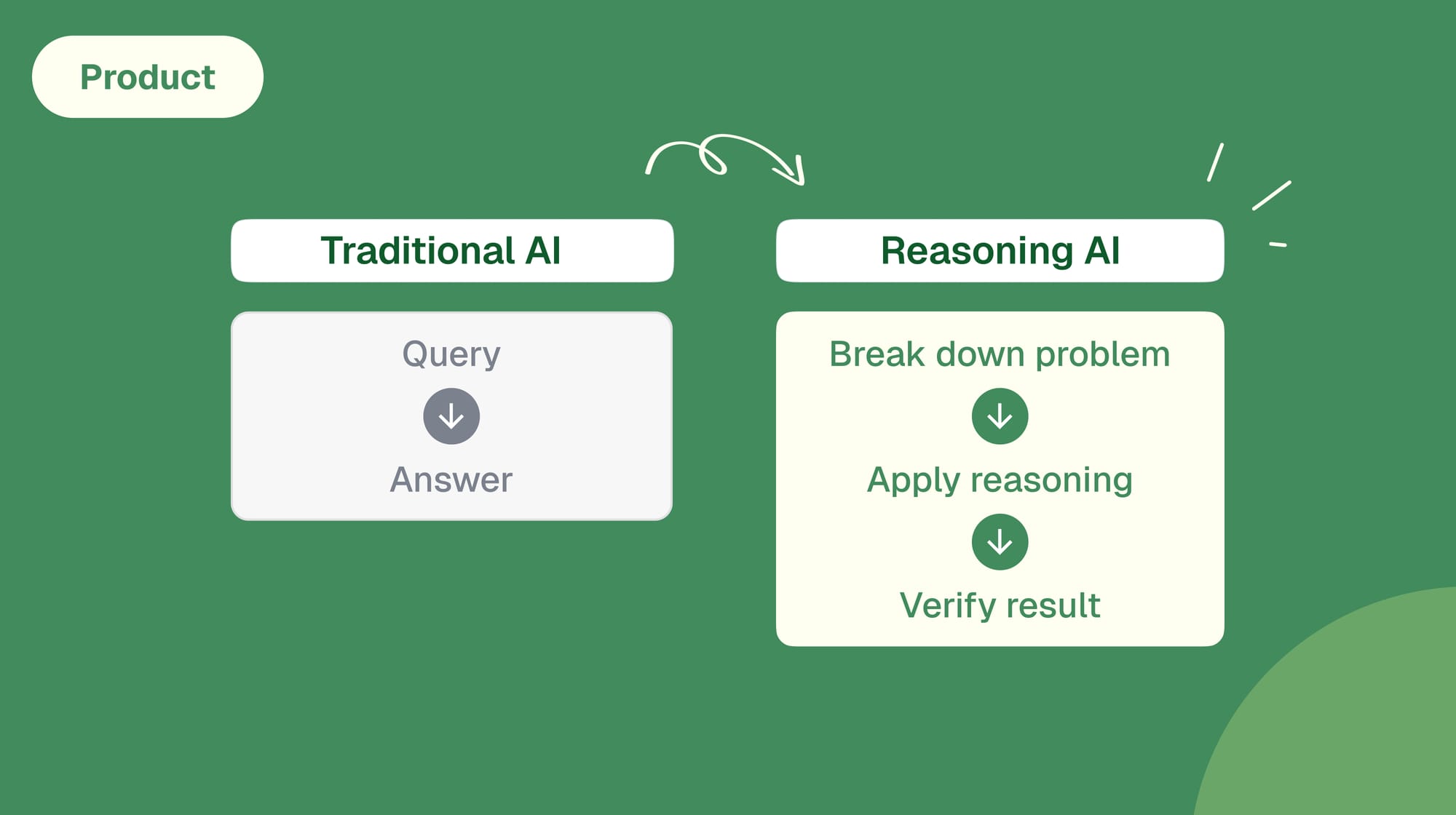

The AI landscape has shifted in recent months with the emergence of reasoning-focused models. These models change how LLMs approach complex tasks: rather than generating quick, probabilistic answers, they implement structured verification loops that dramatically improve reliability.

Watch our webinar on the topic

From direct answers to reasoned solutions

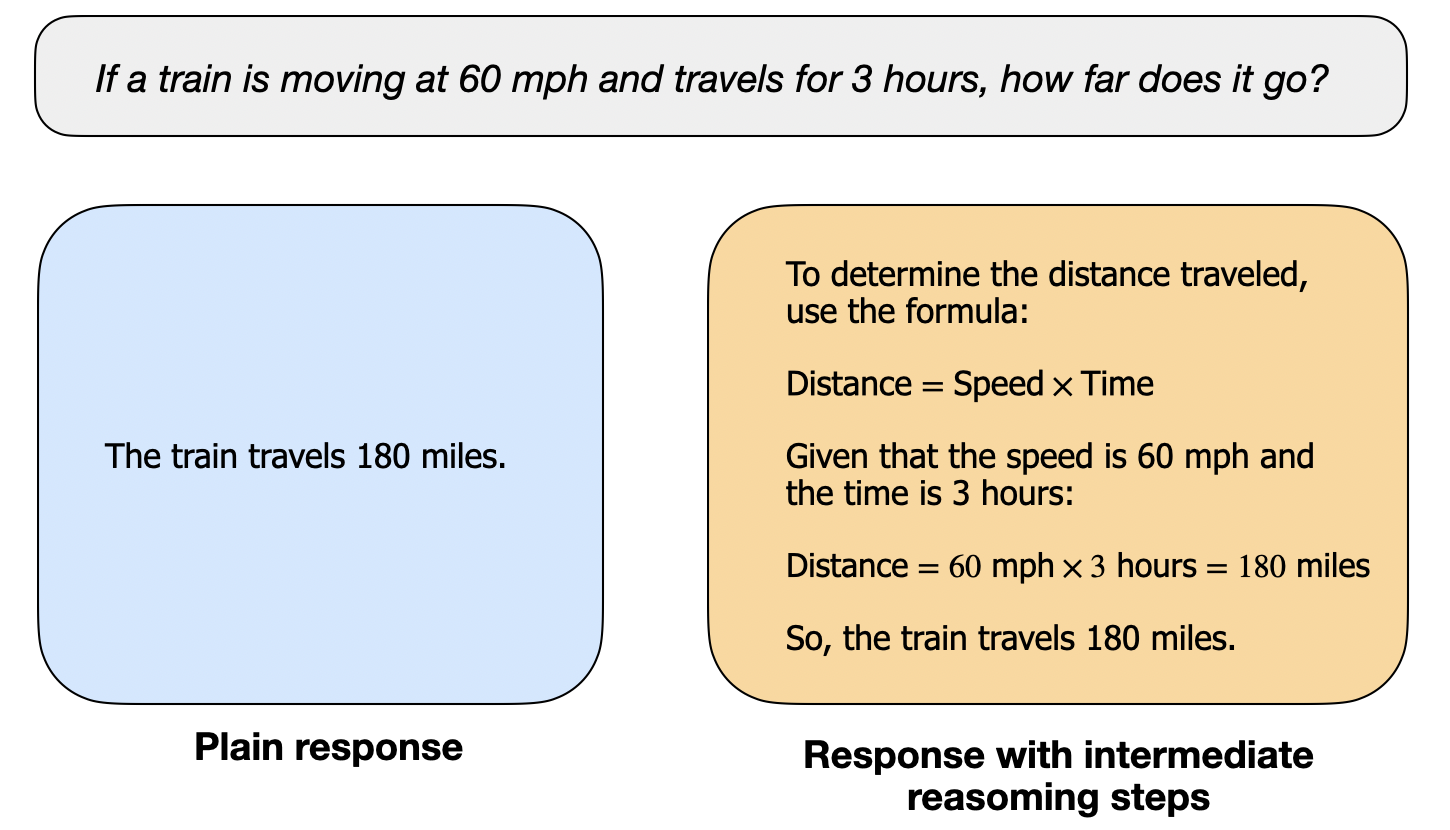

Consider a simple example: calculating the distance traveled by a train moving at 60 miles per hour for 3 hours. A standard model responds directly: "180 miles." A reasoning model breaks the problem down:

- Identify relevant variables: speed (60 mph) and time (3 hours)

- Apply distance formula: distance = speed × time

- Calculate: 60 × 3 = 180 miles

- Verify result matches units and problem constraints

While both reach the correct answer, the reasoning approach demonstrates its value on complex tasks. Our evaluations show reasoning models achieve 96% accuracy on AIME-level mathematics problems, compared to 65% for standard models.
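The four steps of the train example can be written out as a small sketch. This is only an illustration of the identify, apply, calculate, verify pattern in ordinary code, not how a reasoning model works internally; the function name `solve_distance` and the shape of its return value are assumptions made for this example.

```python
def solve_distance(speed_mph: float, hours: float) -> dict:
    """Toy version of the train example: solve, then verify the result."""
    steps = []

    # Step 1: identify the relevant variables.
    steps.append(f"variables: speed={speed_mph} mph, time={hours} h")

    # Step 2: choose the formula.
    steps.append("formula: distance = speed * time")

    # Step 3: calculate.
    distance = speed_mph * hours
    steps.append(f"calculate: {speed_mph} * {hours} = {distance} miles")

    # Step 4: verify by inverting the formula and checking basic constraints.
    verified = (
        hours > 0
        and distance >= 0
        and abs(distance / hours - speed_mph) < 1e-9
    )
    steps.append(f"verify: {'ok' if verified else 'failed'}")

    return {"answer": distance, "verified": verified, "steps": steps}


result = solve_distance(60, 3)
for line in result["steps"]:
    print(line)
```

The verification step costs extra work on a problem this small, which is the point of the comparison: the overhead only pays off as problems get harder.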

Deep research through agent orchestration

At Dust, we've built on this verification capability internally to enable deep research through recursive agent calls. Our implementation allows our @research agent (shown as an example in the webinar) to:

- Break complex queries into subtasks

- Delegate research to specialized subagents

- Synthesize findings into comprehensive reports

- Validate conclusions against source material

In practice, this produces striking results. Testing identical prompts, our research agent cited 14 distinct sources versus 5 for standard models. The difference stems from systematic exploration of available data sources and cross-validation of findings.
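The four capabilities listed above (decompose, delegate, synthesize, validate) can be sketched as a small orchestration loop. This is a minimal illustration under stated assumptions, not Dust's implementation: `plan`, `run_subagent`, `validate`, and `research` are hypothetical names, the planner and subagent are stubs where a real system would call a model, and the corpus is a toy in-memory dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    subtask: str
    claim: str
    source: str


def plan(query: str) -> list[str]:
    """Hypothetical planner; a real one would ask a model to decompose the query."""
    return [f"{query} pricing", f"{query} security"]


def run_subagent(subtask: str, corpus: dict[str, str]) -> list[Finding]:
    """Hypothetical subagent: keyword search over a toy corpus."""
    keyword = subtask.split()[-1]
    return [
        Finding(subtask, text, source)
        for source, text in corpus.items()
        if keyword in text.lower()
    ]


def validate(finding: Finding, corpus: dict[str, str]) -> bool:
    """Keep a finding only if its claim really appears in the cited source."""
    return finding.claim in corpus.get(finding.source, "")


def research(query: str, corpus: dict[str, str]) -> dict:
    findings: list[Finding] = []
    for subtask in plan(query):                         # break into subtasks
        findings.extend(run_subagent(subtask, corpus))  # delegate to subagents
    checked = [f for f in findings if validate(f, corpus)]  # validate against sources
    return {                                            # synthesize a report
        "query": query,
        "claims": [f.claim for f in checked],
        "sources": sorted({f.source for f in checked}),
    }


# Toy corpus, invented for this example only.
corpus = {
    "doc-a": "Vendor pricing is per seat.",
    "doc-b": "Security review passed in Q2.",
    "doc-c": "Unrelated meeting notes.",
}
report = research("vendor", corpus)
print(report["sources"])
```

Counting distinct entries in `sources` is one simple way to measure the kind of source coverage described above.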

The cost-reliability trade-off

Reasoning models exchange compute cost for reliability. They consume more tokens during verification loops but deliver substantially higher accuracy. This shift addresses what we call the "uncanny valley hypothesis": as task complexity increases, required reliability must scale proportionally.

The economics work because token costs continue to decrease while task value remains constant. For enterprise workflows where verification time exceeds 30 minutes, the investment in reasoning capabilities becomes essential.
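One way to make this trade-off concrete is a rough break-even check: compare the cost of the extra reasoning tokens with the value of the human verification time they replace. The sketch below is an assumption about how such a comparison could be framed; the function name, parameters, and every number in the usage example are placeholders, not measured prices or Dust figures.

```python
def reasoning_pays_off(
    extra_tokens: int,
    price_per_million_tokens: float,
    review_minutes_saved: float,
    reviewer_hourly_rate: float,
) -> bool:
    """Return True when saved review time is worth more than the extra tokens."""
    extra_cost = extra_tokens / 1_000_000 * price_per_million_tokens
    review_savings = review_minutes_saved / 60 * reviewer_hourly_rate
    return review_savings > extra_cost


# Placeholder inputs, chosen only to show the calculation.
worth_it = reasoning_pays_off(
    extra_tokens=200_000,
    price_per_million_tokens=10.0,
    review_minutes_saved=30,
    reviewer_hourly_rate=60.0,
)
print(worth_it)
```

With these placeholder values the extra tokens cost 2.00 and the saved review time is worth 30.00, so the check passes; falling token prices push the break-even point further in the same direction.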

Infrastructure implications

Supporting reasoning models requires new infrastructure primitives:

- Deep data access across enterprise systems

- Permission layers for agent orchestration

- Asynchronous command center interfaces

- Standardized action protocols like MCP

We're building Dust to provide this foundation, positioning ourselves as both client and server in the emerging Model Context Protocol ecosystem.
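To give a sense of what a standardized action protocol looks like on the wire, here is a simplified sketch of both sides of an MCP-style tool call. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method; beyond that, everything here is an assumption for illustration: the tool name `search_documents`, the in-process `handle_request` dispatcher, and the stubbed tool body are hypothetical, and the MCP specification remains the authority on exact message shapes.

```python
import json

# Hypothetical tool registry; a real server would expose real enterprise actions.
TOOLS = {
    "search_documents": lambda args: f"results for {args['query']}",
}


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Client side: build a JSON-RPC 2.0 request for a tool call."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def handle_request(request: dict) -> dict:
    """Server side: dispatch a tool call and wrap the result or an error."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
    text = tool(params.get("arguments", {}))
    return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}


request = build_tool_call(1, "search_documents", {"query": "Q2 roadmap"})
response = handle_request(request)
print(json.dumps(response, indent=2))
```

Acting as both client and server means an agent platform needs both halves: it issues requests like `request` to external tools and answers them for agents hosted elsewhere.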

Looking ahead: three major shifts

The rise of reasoning models triggers three fundamental changes:

- Interface Evolution: moving from conversational UI to command center paradigms where humans brief, review plans, and validate results asynchronously

- Integration Depth: shifting from shallow connections (code interpretation, web browsing) to comprehensive enterprise data access

- Productivity Impact: advancing from percentage gains to multiplier effects through reliable automation of complex workflows

Implementation considerations

For teams adopting reasoning models, focus on:

- Structured output validation through shadow reads

- Cache optimization for recursive agent calls

- Permission modeling aligned with existing enterprise systems

- Token economics optimization through consensus pools
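Two of these items, validating structured output and caching repeated agent calls, can be sketched with the standard library alone. This is a minimal illustration, not a description of shadow reads or of any specific Dust mechanism: `call_agent` is a stub standing in for a model call, and the required fields `answer` and `sources` are an assumed schema.

```python
import json
from functools import lru_cache

# Assumed output schema for this example: field name -> expected type.
REQUIRED_FIELDS = {"answer": str, "sources": list}

# Counter used only to show that the cache avoids a second call.
CALLS = {"count": 0}


def validate_output(raw: str) -> dict:
    """Parse agent output and reject it if a required field is missing or mistyped."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("agent output must be a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected):
            raise ValueError(f"field {name!r} missing or not {expected.__name__}")
    return data


@lru_cache(maxsize=256)
def call_agent(subtask: str) -> str:
    """Stub agent call; identical subtasks are served from the cache."""
    CALLS["count"] += 1
    return json.dumps({"answer": f"stub answer for {subtask}", "sources": ["doc-a"]})


first = validate_output(call_agent("summarize Q2"))
second = validate_output(call_agent("summarize Q2"))  # cache hit, no new call
print(CALLS["count"])
```

Caching on the exact subtask string is the simplest possible key; recursive agent trees that rephrase the same subtask would need a normalized key to benefit.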

The shift toward reasoning represents a substantial capability expansion for AI systems. While not eliminating the need for human oversight, it enables reliable delegation of increasingly complex tasks.

We're entering an era where AI assistance evolves from quick answers to reasoned analysis. The enterprises that adapt their infrastructure and workflows to leverage this shift will see dramatic acceleration in their operational capabilities.

Join us in building this future. We're hiring engineers focused on agent orchestration, UI/UX specialists designing human-AI interfaces, and security experts hardening enterprise AI systems. Learn more at dust.tt/jobs