Permissions in the age of AI-driven companies

As AI agents become more autonomous and capable, we're discovering that traditional approaches to permissions and access control break down. At Dust, we're building the foundational layer to handle these new requirements. Let me explain why this matters and how we're approaching it.

The fundamental tension

Traditional permission models are built around human access patterns: "Can Alice access document X?" But as agents become more autonomous, this model falls short. Consider this scenario: an HR team creates an agent to help employees with policy questions. The agent needs access to internal HR documents that most employees can't access directly. If we applied the requesting employee's permissions, the agent could not read those documents and would fail at its core purpose.

A new permission layer

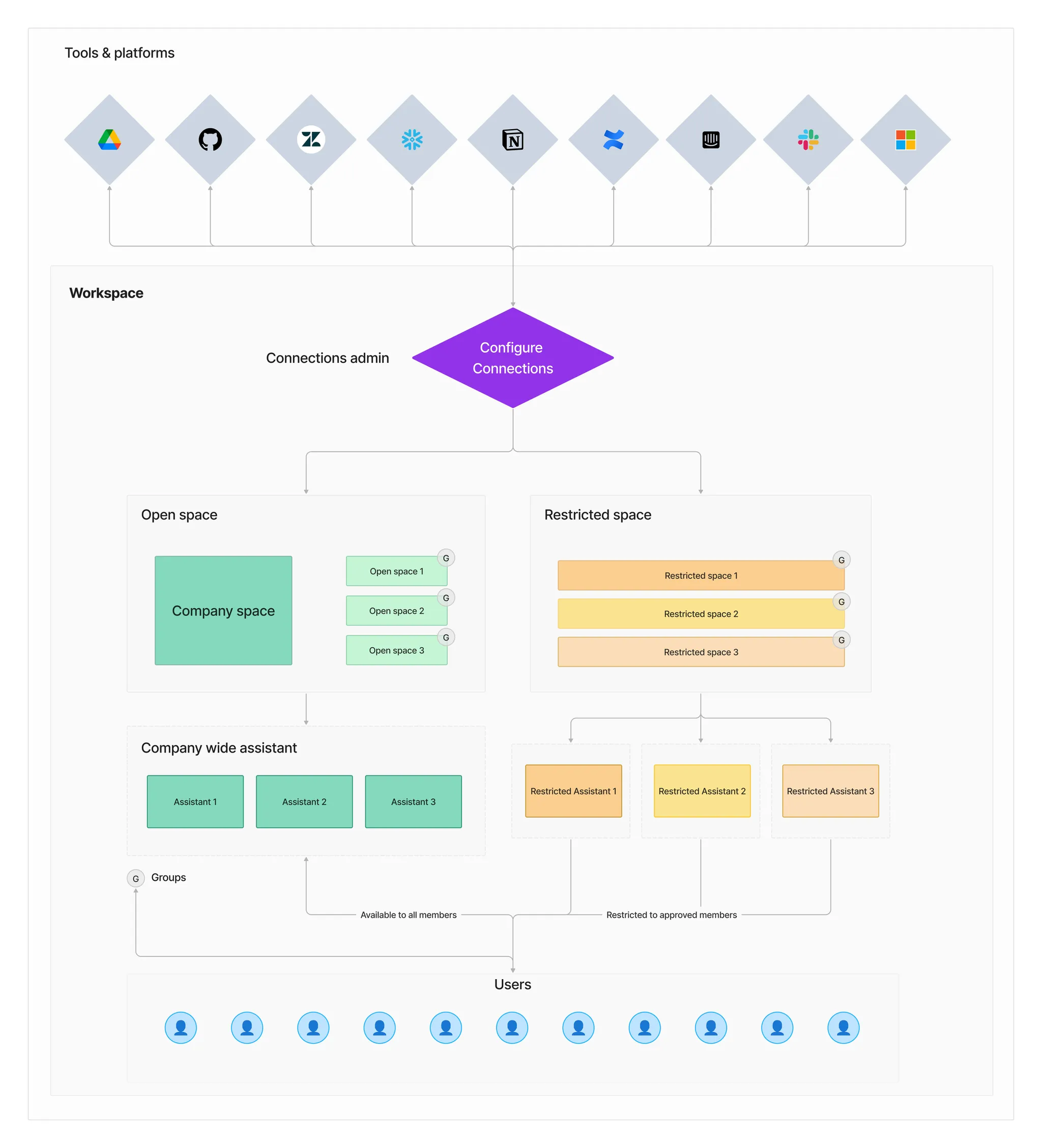

Dust introduces two fundamental concepts to handle permissions:

- Spaces: Containers that segment company data. Spaces can be open (accessible to all) or restricted (limited to specific groups).

- Groups: Collections of users that can be provisioned automatically from your company's identity provider via SCIM.

These abstractions enable the dual-layer permission model that we believe is required to operate AI agents at scale.

- Agent <> Data: When a creator builds an agent on data from a space they have access to, the agent inherits access to exactly the data it was built on, and no more. This access persists regardless of who uses the agent, which ensures consistent behavior. For example, an HR agent created in the HR space will always have access to the HR space data it is based on, even when it is used by employees who don't have direct access to those documents (see Human <> Agent below).

- Human <> Agent: By default, use of an agent is restricted to users who have access to all the spaces containing the data the agent is based on. This ensures safety by default. Administrators can approve overrides that open selected agents to specific groups.

This dual-layer permission model creates a clear separation between data access and agent usage rights. For instance, while the HR agent has access to sensitive HR data, it can be made available to all employees to answer policy questions safely.
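To make the two layers concrete, here is a minimal sketch of how the checks described above could be expressed. It is an illustration under stated assumptions, not Dust's actual implementation: every name in it (`Space`, `User`, `Agent`, `create_agent`, `can_use_agent`, `spaces_readable_by_agent`, `override_groups`) is hypothetical, and groups are reduced to plain strings.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Space:
    """Hypothetical container that segments company data."""
    name: str
    # Groups allowed into a restricted space; ignored when the space is open.
    allowed_groups: frozenset = frozenset()
    is_open: bool = False


@dataclass
class User:
    name: str
    groups: set = field(default_factory=set)


@dataclass
class Agent:
    name: str
    # Agent <> Data: the spaces the agent reads from, fixed at creation time.
    spaces: tuple
    # Human <> Agent: groups an administrator has explicitly opened the agent to.
    override_groups: set = field(default_factory=set)


def can_access_space(user: User, space: Space) -> bool:
    """A user reaches a space if it is open or they share a group with it."""
    return space.is_open or bool(user.groups & space.allowed_groups)


def create_agent(creator: User, name: str, spaces: list) -> Agent:
    """The creator may only build an agent on spaces they can access."""
    for space in spaces:
        if not can_access_space(creator, space):
            raise PermissionError(f"{creator.name} cannot access {space.name}")
    return Agent(name=name, spaces=tuple(spaces))


def can_use_agent(user: User, agent: Agent) -> bool:
    """Default: the user needs every space the agent is based on.
    An administrator override for one of the user's groups also grants use."""
    if user.groups & agent.override_groups:
        return True
    return all(can_access_space(user, space) for space in agent.spaces)


def spaces_readable_by_agent(agent: Agent) -> tuple:
    """Data access depends on the agent only; the requesting user is
    deliberately not a parameter."""
    return agent.spaces


# The HR scenario from the text, with made-up users and group names.
hr_space = Space("HR", allowed_groups=frozenset({"hr-team"}))
alice = User("alice", {"hr-team"})
bob = User("bob", {"employees"})

hr_agent = create_agent(alice, "hr-policy-helper", [hr_space])
print(can_use_agent(bob, hr_agent))        # False: safe by default
hr_agent.override_groups.add("employees")  # administrator override
print(can_use_agent(bob, hr_agent))        # True
print(spaces_readable_by_agent(hr_agent) == (hr_space,))  # True, whoever asks
```

The design point the sketch tries to capture is that `spaces_readable_by_agent` never looks at the requesting user, while `can_use_agent` never changes what the agent can read. The two questions are answered independently.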

The data warehouse parallel

This might feel like extra overhead – it is. But we've seen this pattern before with data warehouses, where companies maintain separate access policies despite having existing permissions in source systems. Why? Because the value of unified analytics outweighs the cost of managing additional permissions.

We believe the same will be true for AI agents, but at an even larger scale. As agents become a new workforce within organizations, the ability to properly permission them becomes mission-critical. The productivity gains from having agents that can securely access and act on company data will far outweigh the overhead of managing their permissions.

Looking forward

The traditional "private by default, public when necessary" approach that most companies operate under today has become a structural impediment to AI adoption. Information silos, while historically seen as a prudent security measure, now actively prevent AI systems from delivering their full potential value. We believe the companies that will extract the most value from AI are those willing to flip this paradigm to "public by default, private when necessary". This shift recognizes that AI systems, unlike humans, create value precisely by making connections across traditionally siloed information, while still maintaining strict controls around genuinely sensitive data.

As AI agents become more integral to company operations and form a new workforce within organizations, having a clear system of record for agent permissions will be as critical as having one for human permissions. Dust aims to be this system of record, providing the infrastructure layer that lets companies safely deploy AI at scale.

We're not just building a permission system – we're building the operating system for the AI-driven company. Just as Windows provided universal UI primitives that made all applications more productive, Dust provides universal AI primitives that make all company workflows more intelligent.

The future of work will be defined by how effectively humans can orchestrate AI agents. Having the right permission infrastructure isn't just an enabler – it's the foundation of this future.

We're hiring! Join us to define the future of how we work and operate with intelligent machines. From UI/UX to security, the future has yet to be invented: https://dust.tt/jobs