Behind the curtains: how we conducted our first major architecture transition with no downtime

How our eng team orchestrated a major migration, improving security, performance, and scalability while enabling better AI agent capabilities.

We recently completed a significant infrastructure project that reorganized how data flows between our services. This behind-the-scenes work lays the groundwork for exciting product improvements like better keyword search and more intuitive file organization. It's a perfect example of how technical investments can both solve existing pain points and unlock new product possibilities.

Context

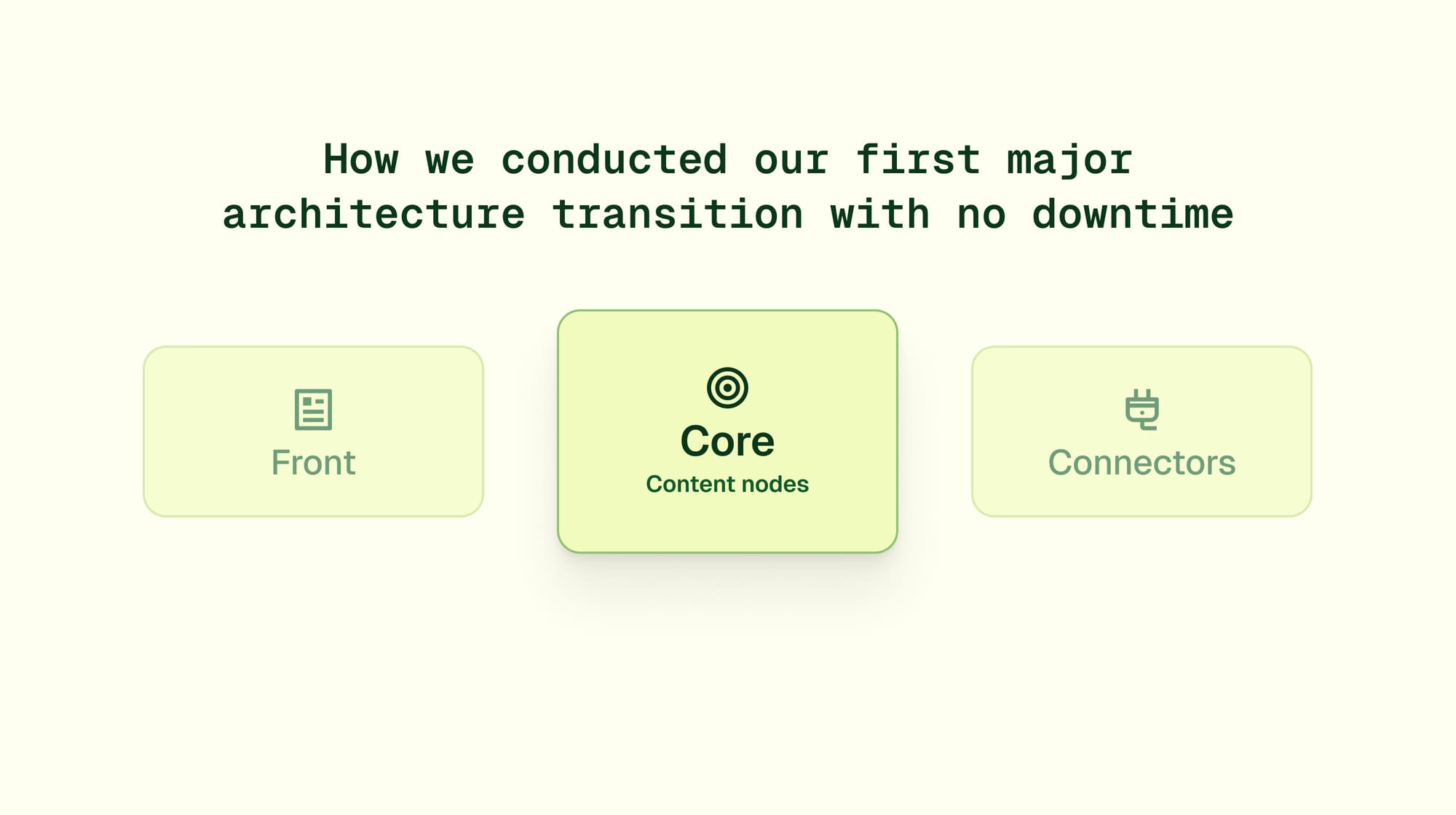

At Dust, our product is structured around three primary services:

- A Next.js application called front, which serves our user-facing product.
- A service written in Rust called core, which acts as a backend for front and leverages customer data to provide models with raw capabilities such as RAG.
- A TypeScript component called connectors, which acts as an orchestration workflow between each connection provider (Slack, Notion, Google Drive, …) and the rest of our infrastructure.

connectors fully encapsulates the provider-specific logic and exposes a unified API to the other services.

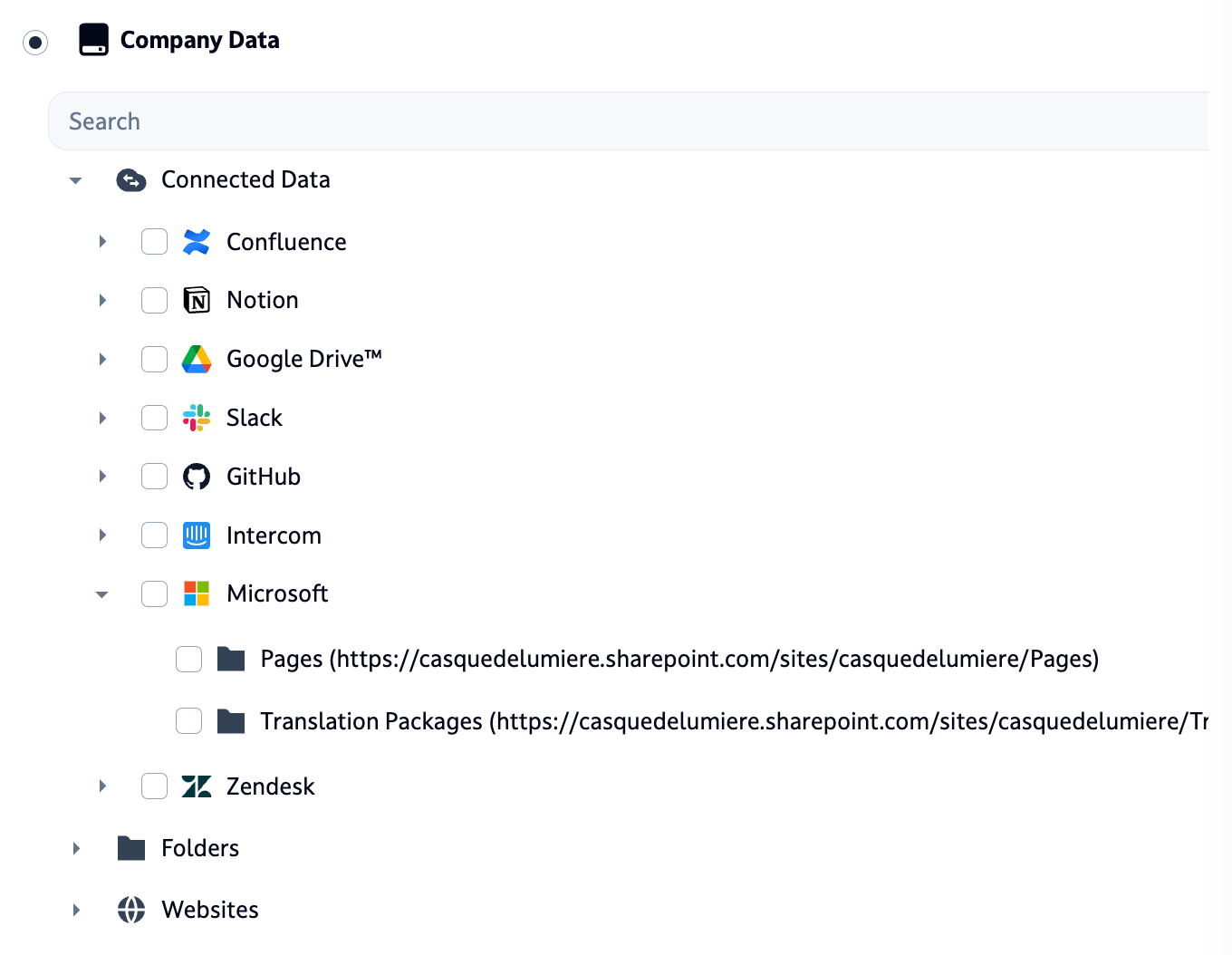

Until recently, connectors was also responsible for defining and serving what we call “content nodes” in Dust: a representation of any piece of data we synchronize for RAG or other capabilities, such as Notion pages, Slack threads, or Zendesk tickets. The content node hierarchy is displayed throughout the product, for both knowledge management and agent configuration.

We moved the content node hierarchy from the connectors service to the core service. While this might sound like a purely technical refactoring, it is a fundamental improvement to our platform's architecture and unlocks several product capabilities.

Initially, each connection's implementation had its own custom logic for retrieving content nodes: our GitHub connection organized issues under repositories, our Confluence connection structured pages under spaces, and so on. Meanwhile, core managed the actual data without any hierarchical context. Having built several connectors, we had the perspective to see an opportunity: integrate both the data and its hierarchy into a single, unified interface.
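To make the idea of a unified interface concrete, here is a minimal TypeScript sketch. The `ContentNode` shape, its fields, and the helper names are hypothetical illustrations, not Dust's actual schema. The point is that once every provider expresses its hierarchy as plain parent links, a single generic function can list children or build a breadcrumb for GitHub and Confluence alike.

```typescript
// Hypothetical provider-agnostic node: the hierarchy is expressed only through
// parent links, never through provider-specific types like "repository".
interface ContentNode {
  nodeId: string;
  parentId: string | null; // null for roots, e.g. a repository or a space
  title: string;
}

// One generic implementation replaces per-connector "list children" logic.
function childrenOf(nodes: ContentNode[], parentId: string | null): ContentNode[] {
  return nodes.filter((n) => n.parentId === parentId);
}

// Follows parent links up to the root and returns titles from root to node.
function pathTo(nodes: ContentNode[], nodeId: string): string[] {
  const byId = new Map<string, ContentNode>();
  nodes.forEach((n) => byId.set(n.nodeId, n));
  const path: string[] = [];
  const seen = new Set<string>(); // guards against accidental cycles
  let current = byId.get(nodeId);
  while (current !== undefined && !seen.has(current.nodeId)) {
    seen.add(current.nodeId);
    path.unshift(current.title);
    current = current.parentId === null ? undefined : byId.get(current.parentId);
  }
  return path;
}

// GitHub issues under repositories and Confluence pages under spaces fit the
// same model (identifiers and titles are made up for the example).
const nodes: ContentNode[] = [
  { nodeId: "gh-repo-1", parentId: null, title: "example-repo" },
  { nodeId: "gh-issue-42", parentId: "gh-repo-1", title: "Fix search" },
  { nodeId: "cf-space-1", parentId: null, title: "Engineering" },
  { nodeId: "cf-page-7", parentId: "cf-space-1", title: "Runbook" },
];

console.log(childrenOf(nodes, "gh-repo-1").map((n) => n.title).join(", ")); // Fix search
console.log(pathTo(nodes, "cf-page-7").join(" / ")); // Engineering / Runbook
```

With this model, supporting a new provider means emitting nodes with correct parent links, with no new traversal code.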

Why we made this change

Technical improvements

Permissioning in Dust is centered around the notion of spaces: each user has access to certain spaces, and administrators select the subset of the company data that is exposed to each space.

The migration moved security enforcement deeper into our architecture. Previously, the web app's backend fetched all the data from our connectors service and then filtered it. Our new endpoint in core accepts a space filter and inherently limits results to data within the requester’s permissions. This shift reinforces data segregation between spaces and reduces unnecessary data transfer, since filtering happens at the source rather than after retrieval.
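The difference between filtering after retrieval and filtering at the source can be sketched in a few lines. This is a hypothetical in-memory model that assumes a space exposes a list of data sources and each node belongs to exactly one data source; the names (`Space`, `queryNodes`, `allowedSpaceIds`) are illustrative and not core's real API.

```typescript
// Hypothetical model: a space exposes an admin-selected set of data sources,
// and each content node belongs to exactly one data source.
interface Space {
  spaceId: string;
  dataSourceIds: string[];
}

interface ContentNode {
  nodeId: string;
  dataSourceId: string;
  title: string;
}

// Core-side query sketch: the caller passes the spaces the requester can read,
// and filtering happens here, before anything is returned. Unknown space ids
// grant nothing.
function queryNodes(allNodes: ContentNode[], spaces: Space[], allowedSpaceIds: string[]): ContentNode[] {
  const allowedSpaces = new Set(allowedSpaceIds);
  const visibleDataSources = new Set<string>();
  spaces
    .filter((s) => allowedSpaces.has(s.spaceId))
    .forEach((s) => s.dataSourceIds.forEach((id) => visibleDataSources.add(id)));
  return allNodes.filter((n) => visibleDataSources.has(n.dataSourceId));
}

const spaces: Space[] = [
  { spaceId: "engineering", dataSourceIds: ["github", "notion-eng"] },
  { spaceId: "sales", dataSourceIds: ["slack-sales"] },
];
const allNodes: ContentNode[] = [
  { nodeId: "n1", dataSourceId: "github", title: "Repository" },
  { nodeId: "n2", dataSourceId: "slack-sales", title: "#deals" },
  { nodeId: "n3", dataSourceId: "notion-eng", title: "Onboarding" },
];

// A requester limited to the engineering space only ever receives its nodes.
console.log(queryNodes(allNodes, spaces, ["engineering"]).map((n) => n.nodeId).join(",")); // n1,n3
```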

We also addressed a persistent data consistency problem. Previously, each connection's implementation had to ensure, through extensive code sharing and testing, that the content nodes exposed to front were consistent with the data indexed in core. Now each node's hierarchy is passed to core alongside the data to index, which enforces consistency by design, simplifies our existing codebase, and increases our velocity when integrating Dust with new connection providers.
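Here is a hedged sketch of what consistency by design can mean. It assumes, hypothetically, that an upsert carries the document's ancestors (`parentIds`) alongside its text, and it uses an in-memory `DocumentIndex` class as a stand-in for core; none of these names come from Dust's codebase. Because the hierarchy is derived from the indexed documents themselves, it cannot list a node whose data is missing.

```typescript
// Hypothetical upsert payload: a document's hierarchy travels with its content.
interface UpsertRequest {
  documentId: string;
  text: string;
  parentIds: string[]; // ancestors, nearest first, e.g. [pageId, spaceId]
}

// In-memory stand-in for an index. Content and position in the hierarchy are
// written in the same call, so they cannot drift apart.
class DocumentIndex {
  private readonly docs = new Map<string, UpsertRequest>();

  upsert(req: UpsertRequest): void {
    // Copy the array so later mutations by the caller do not leak in.
    this.docs.set(req.documentId, { ...req, parentIds: req.parentIds.slice() });
  }

  delete(documentId: string): void {
    this.docs.delete(documentId);
  }

  // The hierarchy is derived from indexed documents only: a node shown to the
  // user is, by construction, a node whose data is indexed.
  childrenOf(parentId: string): string[] {
    const children: string[] = [];
    this.docs.forEach((doc) => {
      if (doc.parentIds[0] === parentId) children.push(doc.documentId);
    });
    return children;
  }
}

const index = new DocumentIndex();
index.upsert({ documentId: "page-1", text: "Runbook", parentIds: ["space-1"] });
index.upsert({ documentId: "page-2", text: "On-call", parentIds: ["page-1", "space-1"] });
index.delete("page-2"); // removing the data also removes it from the hierarchy
console.log(index.childrenOf("page-1").length); // 0
```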

The project let us cut an unnecessary dependency between front and connectors for data source management. This might seem trivial, but removing this dependency made our architecture cleaner and easier to maintain, with fewer moving parts that could break.

Perhaps most importantly, we consolidated logic that was previously scattered across different connectors. Instead of maintaining separate implementations for each connector's hierarchy management, we now have a single implementation in core. When we need to fix issues with the hierarchy, we fix them once, not nine separate times.

The performance gains have been substantial, too. Page loading is noticeably faster with the new implementation, and we can now handle massive folder structures with thousands of files without breaking a sweat.

Product enablers

This migration doesn't directly add new features, but it lays crucial groundwork that will make several planned product improvements possible. With this foundation in place, we're now positioned to significantly improve company data management and agent configuration by simplifying content node selection across massive data sources.

The UI could get a boost, too, with specific icons for different file types, making the interface more intuitive and scannable. With the pagination infrastructure now in place, we'll be able to handle large data sources more elegantly in future iterations, providing a smoother experience when browsing extensive content collections.

Implementation approach

The main challenge of this migration was data consolidation. We had to bring together disparate data models and hierarchical structures from nine different connectors, each with its own quirks and implementation details, into a single, coherent system in core. This required careful mapping of concepts, standardization of fields, and a unified data model that could represent every variation we needed to support. The core implementation also had to handle all the edge cases that individual connectors had accumulated over time, without breaking existing functionality.
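As an illustration of mapping concepts and standardizing fields, the sketch below normalizes two made-up provider records into one node shape. The record fields, node ID formats, MIME type strings, and the `"Untitled"` fallback are all hypothetical; they only show how a per-connector quirk (here, an empty title) can get one shared home.

```typescript
// Hypothetical provider records, roughly as two connectors might see them.
interface GithubIssue {
  repoId: number;
  number: number;
  title: string;
}
interface ZendeskTicket {
  brandId: string;
  ticketId: number;
  subject: string;
}

// Hypothetical unified node model: the same standardized fields for every provider.
interface ContentNode {
  nodeId: string;
  parentIds: string[]; // ancestors, nearest first
  title: string;
  mimeType: string;
}

// An edge case that used to live in individual connectors gets one shared
// home: a fallback for items without a usable title.
function normalizeTitle(raw: string): string {
  const trimmed = raw.trim();
  return trimmed.length > 0 ? trimmed : "Untitled";
}

function fromGithubIssue(issue: GithubIssue): ContentNode {
  return {
    nodeId: `github-issue-${issue.repoId}-${issue.number}`,
    parentIds: [`github-repo-${issue.repoId}`],
    title: normalizeTitle(issue.title),
    mimeType: "application/vnd.example.github.issue",
  };
}

// Zendesk's "subject" maps onto the same standardized "title" field.
function fromZendeskTicket(ticket: ZendeskTicket): ContentNode {
  return {
    nodeId: `zendesk-ticket-${ticket.ticketId}`,
    parentIds: [`zendesk-brand-${ticket.brandId}`],
    title: normalizeTitle(ticket.subject),
    mimeType: "application/vnd.example.zendesk.ticket",
  };
}

console.log(fromZendeskTicket({ brandId: "support", ticketId: 7, subject: "   " }).title); // Untitled
```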

An interesting architectural constraint was that our core service is deliberately provider-agnostic: it has no context about which specific provider (Slack, Google Drive, GitHub, etc.) a piece of data comes from. This design choice promotes a clean separation of concerns and makes core more maintainable, but it presented unique challenges for this migration. We had to meticulously identify all the provider-specific logic baked into the connectors and either extract it to a shared layer or find provider-agnostic alternatives. In some cases, we encoded provider-specific information into standardized fields like MIME types, so that core, and then front, could make appropriate decisions without knowing the underlying provider.
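A minimal sketch of encoding provider-specific information into MIME types, assuming hypothetical `application/vnd.example.*` values (Dust's actual MIME types may differ). Core only stores the string; front maps it to presentation decisions, such as an icon or whether a node can be expanded, without ever branching on the provider.

```typescript
// Hypothetical MIME types a connector might assign; core stores them as
// opaque strings and never interprets them.
const MIME = {
  slackChannel: "application/vnd.example.slack.channel",
  slackThread: "application/vnd.example.slack.thread",
  githubRepository: "application/vnd.example.github.repository",
  githubIssue: "application/vnd.example.github.issue",
  pdf: "application/pdf",
} as const;

type Icon = "channel" | "chat" | "repository" | "issue" | "document";

const ICONS = new Map<string, Icon>([
  [MIME.slackChannel, "channel"],
  [MIME.slackThread, "chat"],
  [MIME.githubRepository, "repository"],
  [MIME.githubIssue, "issue"],
]);

// Container types can be expanded in the UI to show their children.
const CONTAINERS = new Set<string>([MIME.slackChannel, MIME.githubRepository]);

// Front decides presentation from the MIME type alone, without knowing which
// provider the node came from. Unknown types fall back to a generic icon.
function iconFor(mimeType: string): Icon {
  return ICONS.get(mimeType) ?? "document";
}

function isExpandable(mimeType: string): boolean {
  return CONTAINERS.has(mimeType);
}

console.log(iconFor(MIME.slackThread), isExpandable(MIME.slackThread)); // chat false
```

A `Map` is used rather than a plain object so that arbitrary strings such as `"toString"` cannot accidentally match inherited object properties.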

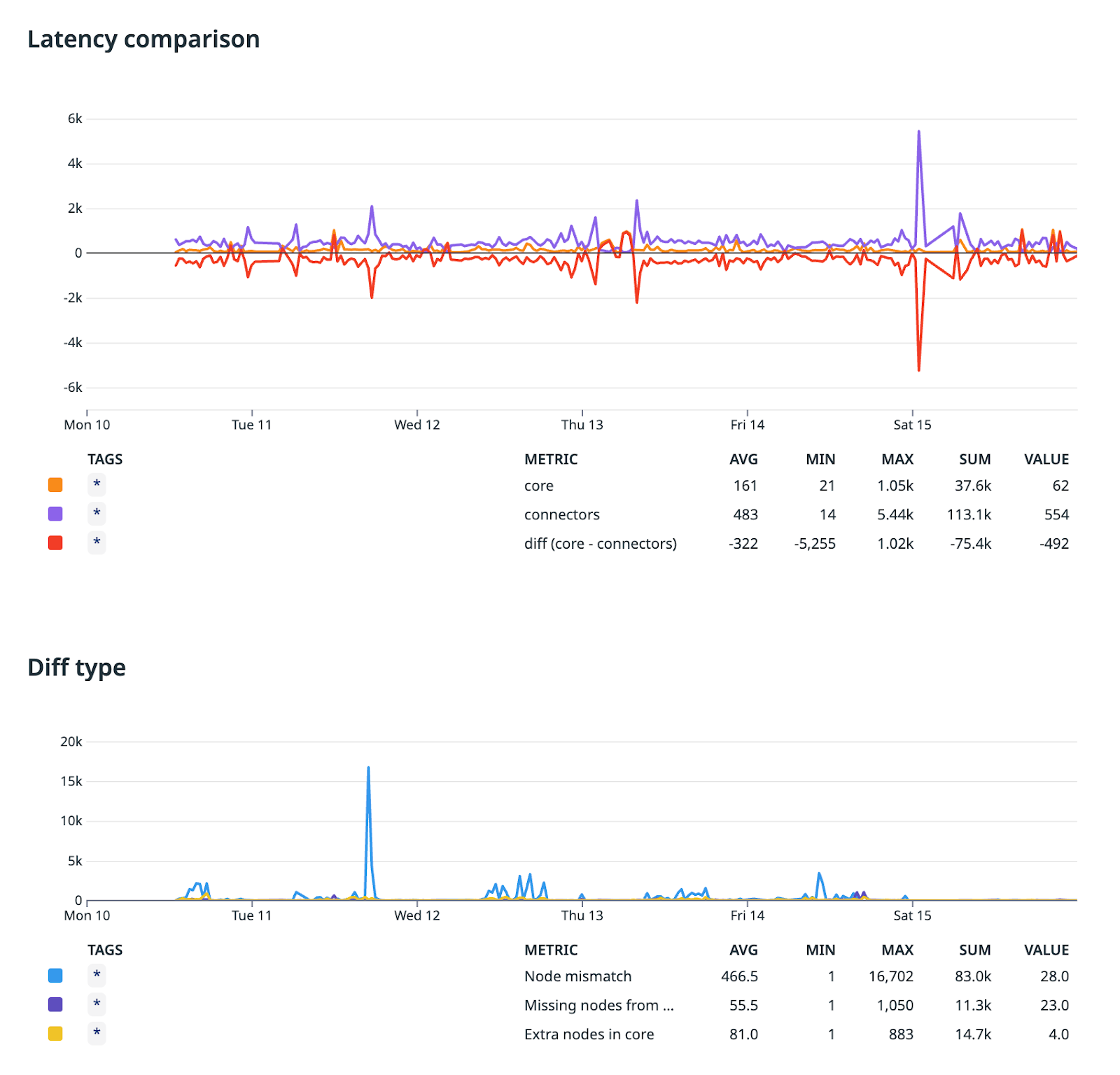

Our implementation strategy balanced thoroughness with safety. We added the missing metadata fields to the node model and standardized MIME types across all connectors. The project was like pulling a thread on an old sweater: we started fetching the data from core before completing the migration, so every piece of metadata that had not been migrated yet became immediately apparent. The cornerstone of our approach was a shadow read system that ran both implementations in parallel, letting us compare results in real time and progressively roll out the migration without breaking existing functionality.
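The shadow read pattern can be sketched as follows. This is a hypothetical TypeScript version, not Dust's implementation: `readLegacy`, `readCore`, and `report` are caller-supplied functions (in practice `report` might feed metrics or logs), and the compared fields are illustrative. The user always receives the legacy result, while differences with core are collected in the background.

```typescript
// Hypothetical minimal node shape compared by the shadow read.
interface NodeSummary {
  nodeId: string;
  title: string;
  parentId: string | null;
}

type Discrepancy =
  | { nodeId: string; kind: "missing_in_core" | "extra_in_core" }
  | { nodeId: string; kind: "field_mismatch"; field: "title" | "parentId" };

// Compares the legacy (connectors) result with the new (core) result.
function diffNodes(legacy: NodeSummary[], core: NodeSummary[]): Discrepancy[] {
  const coreById = new Map<string, NodeSummary>();
  core.forEach((n) => coreById.set(n.nodeId, n));
  const legacyIds = new Set(legacy.map((n) => n.nodeId));
  const out: Discrepancy[] = [];
  for (const l of legacy) {
    const c = coreById.get(l.nodeId);
    if (c === undefined) {
      out.push({ nodeId: l.nodeId, kind: "missing_in_core" });
      continue;
    }
    for (const field of ["title", "parentId"] as const) {
      if (l[field] !== c[field]) out.push({ nodeId: l.nodeId, kind: "field_mismatch", field });
    }
  }
  for (const c of core) {
    if (!legacyIds.has(c.nodeId)) out.push({ nodeId: c.nodeId, kind: "extra_in_core" });
  }
  return out;
}

// Shadow read: return the legacy result; compare with core once both reads
// complete. Failures on the shadow path (including in `report`) are swallowed
// so they never affect the response.
function shadowRead(
  readLegacy: () => Promise<NodeSummary[]>,
  readCore: () => Promise<NodeSummary[]>,
  report: (diffs: Discrepancy[]) => void,
): Promise<NodeSummary[]> {
  const legacyPromise = readLegacy();
  Promise.all([legacyPromise, readCore()])
    .then(([legacy, core]) => {
      const diffs = diffNodes(legacy, core);
      if (diffs.length > 0) report(diffs);
    })
    .catch(() => undefined);
  return legacyPromise;
}

shadowRead(
  () => Promise.resolve([{ nodeId: "a", title: "A", parentId: null }]),
  () => Promise.resolve([{ nodeId: "a", title: "A (new)", parentId: null }]),
  (diffs) => console.warn("shadow read mismatch", diffs),
).then((served) => console.log(served.length)); // 1
```

Comparing asynchronously keeps the shadow path off the critical path: a slow or failing core read cannot delay or break the response the user sees.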

We tracked discrepancies in a Datadog notebook, which gave us visibility into issues as they emerged. This dual-system approach let us methodically address inconsistencies before switching over entirely.

We maintained rollback capability for a full week after deployment: a safety net that gave us the confidence to proceed while ensuring we could revert if any serious issues emerged. We also didn't just test with small datasets; we deliberately verified performance against our largest data sources to make sure the solution would scale in production.
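Keeping rollback cheap usually means leaving the old code path deployed behind a switch. Below is a hedged sketch of such a switch with deterministic, percentage-based bucketing per workspace; the `RolloutConfig` shape, the pinning list, and the FNV-1a-style hash are assumptions for illustration, not a description of Dust's tooling.

```typescript
// Hypothetical rollout switch: the legacy connectors path stays deployed
// behind it, so rolling back is a configuration change, not a redeploy.
interface RolloutConfig {
  percent: number; // share of workspaces (0..100) reading content nodes from core
  pinnedToLegacy: string[]; // workspaces explicitly kept on the old path
}

// FNV-1a-style 32-bit hash over UTF-16 code units: the same workspace always
// lands in the same bucket, so raising the percentage only adds workspaces.
function bucketOf(workspaceId: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < workspaceId.length; i++) {
    hash ^= workspaceId.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash % 100;
}

function readsFromCore(workspaceId: string, config: RolloutConfig): boolean {
  if (config.pinnedToLegacy.indexOf(workspaceId) !== -1) return false;
  return bucketOf(workspaceId) < config.percent;
}

// Progressive rollout, then rollback by setting percent back to 0.
const ramp: RolloutConfig = { percent: 25, pinnedToLegacy: ["ws-pinned"] };
const rollback: RolloutConfig = { percent: 0, pinnedToLegacy: [] };
console.log(readsFromCore("ws-pinned", ramp)); // false
console.log(readsFromCore("ws-123", rollback)); // false
```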

A key architectural decision was to use a single endpoint for content nodes in core. This avoided duplicating logic and made signature changes smoother than they would have been with separate endpoints. It also ensured that our security filters are applied consistently across all hierarchy requests.
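A single endpoint can serve every hierarchy request when its query accepts optional filters. The sketch below is hypothetical (the `NodesQuery` fields, the cursor scheme based on `nodeId`, and the function name are illustrative): the permission filter is a required field and is applied first in the only code path, which is what makes consistent enforcement easy to reason about.

```typescript
// Hypothetical node shape for the example.
interface ContentNode {
  nodeId: string;
  parentId: string | null;
  dataSourceId: string;
  title: string;
}

// Hypothetical query for a single content nodes endpoint: browsing a folder,
// resolving selected nodes, and paginating all go through it.
interface NodesQuery {
  allowedDataSourceIds: string[]; // required; derived from the requester's spaces
  nodeIds?: string[];
  parentId?: string | null; // null selects roots; omitted means "any parent"
  limit: number;
  cursor?: string; // nodeId of the last item of the previous page
}

interface NodesPage {
  nodes: ContentNode[];
  nextCursor: string | null;
}

function getContentNodes(all: ContentNode[], q: NodesQuery): NodesPage {
  const allowed = new Set(q.allowedDataSourceIds);
  const wanted = q.nodeIds === undefined ? null : new Set(q.nodeIds);
  const matches = all
    // Security filter first, unconditionally: no caller can skip it.
    .filter((n) => allowed.has(n.dataSourceId))
    .filter((n) => wanted === null || wanted.has(n.nodeId))
    .filter((n) => q.parentId === undefined || n.parentId === q.parentId)
    // Ordering by nodeId makes the cursor well defined.
    .sort((a, b) => (a.nodeId < b.nodeId ? -1 : a.nodeId > b.nodeId ? 1 : 0))
    .filter((n) => q.cursor === undefined || n.nodeId > q.cursor);
  const page = matches.slice(0, Math.max(0, q.limit));
  const hasMore = page.length > 0 && matches.length > page.length;
  return { nodes: page, nextCursor: hasMore ? page[page.length - 1].nodeId : null };
}
```

A caller browsing a folder would pass `parentId` and `limit`, then repeat the request with `cursor` set to the returned `nextCursor` until it comes back `null`.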

Conclusion

This migration is more than a technical refactoring: it's a foundation for future product improvements. By bringing content nodes into core, we've simplified our architecture, improved performance, and laid the groundwork for new features that will enhance the user experience.

The project shows how technical investments can both improve platform stability and unlock new product capabilities. As one of our engineers put it in the announcement when the project shipped: "We will milk the value of this project for the years to come!"